Understanding Neural Networks: A Comprehensive Guide

Neural networks represent one of the most significant breakthroughs in artificial intelligence, enabling machines to learn complex patterns and make intelligent decisions. This comprehensive guide explores the fundamental concepts, architecture, and applications of neural networks in modern AI systems.

What Are Neural Networks?

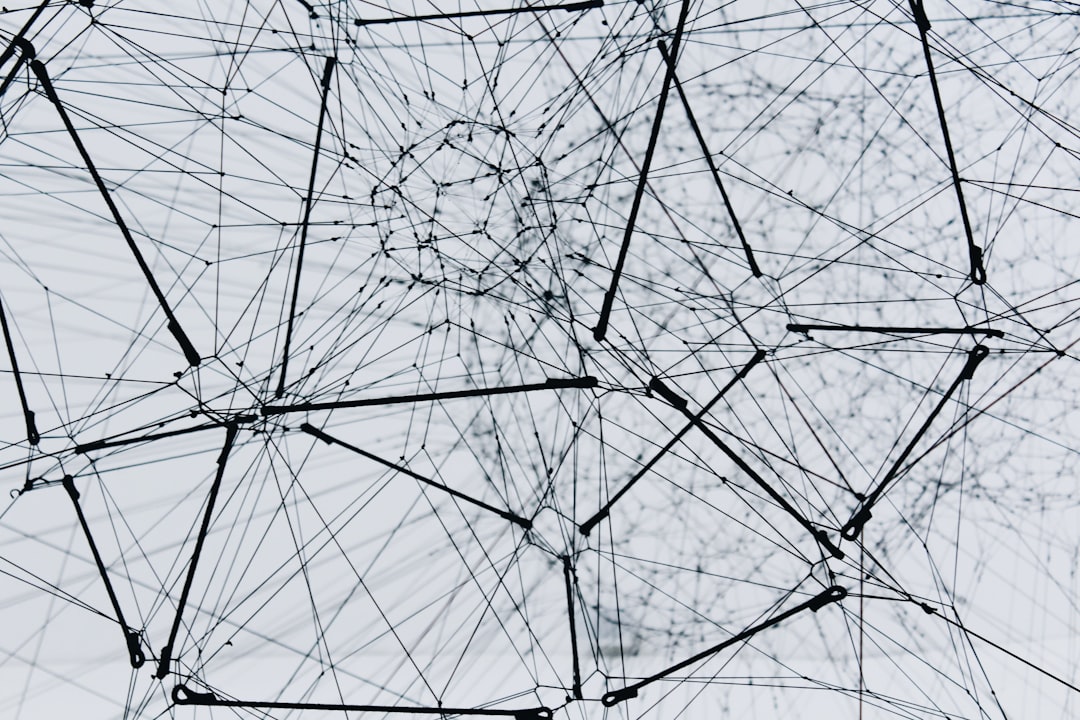

Neural networks are computational models inspired by the biological neural networks in human brains. They consist of interconnected nodes or neurons organized in layers, each processing information and passing it forward. These networks excel at recognizing patterns, classifying data, and making predictions based on training examples.

The basic structure includes an input layer that receives data, one or more hidden layers that transform it through weighted connections, and an output layer that produces results. During training, backpropagation computes how much each weight contributed to the error, and an optimizer uses those gradients to adjust the network's parameters so that errors shrink over time.
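The layered structure described above can be sketched as a forward pass through a tiny network. This is a minimal illustration in plain Python, not a reference implementation: the helper names (`dense`, `relu`, `forward`), the parameter names (`W1`, `b1`, `W2`, `b2`), and the weight values are all assumptions chosen for the example.

```python
def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the weights of output neuron j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, v) for v in values]


def forward(x, params):
    """Input layer -> one hidden layer (with ReLU) -> output layer."""
    hidden = relu(dense(x, params["W1"], params["b1"]))
    return dense(hidden, params["W2"], params["b2"])


# Hypothetical hand-picked parameters: 2 inputs, 2 hidden neurons, 1 output.
params = {
    "W1": [[1.0, -1.0], [0.5, 0.5]],
    "b1": [0.0, 0.0],
    "W2": [[1.0, 2.0]],
    "b2": [0.5],
}

output = forward([2.0, 1.0], params)
print(output)  # [4.5]
```

In a real network the parameters would be learned from data rather than written by hand, and a framework would handle the arithmetic on whole batches at once.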

Architecture and Components

Understanding neural network architecture is crucial for implementing effective AI solutions. A network consists of multiple layers, each serving a specific purpose in the learning process. The input layer receives raw data, whether images, text, or numerical values. Hidden layers transform that data by applying weighted connections followed by activation functions.

Activation functions introduce non-linearity into the network, enabling it to learn complex relationships; without them, stacked layers would collapse into a single linear transformation. Common choices include ReLU, which outputs max(0, x); sigmoid, which maps inputs to the range (0, 1); and tanh, which maps them to (-1, 1). The choice of activation function significantly affects network performance and training efficiency.
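The three activation functions named above are short enough to write out directly. The sketch below uses only Python's standard library. The two-branch form of `sigmoid` is a choice made here to avoid overflow for very negative inputs, not something the definitions require.

```python
import math


def relu(x):
    """ReLU: passes positive values through, clamps negatives to 0."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid: squashes any real number into the range 0 to 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0 here, so exp(x) cannot overflow
    return z / (1.0 + z)


def tanh(x):
    """Tanh: squashes any real number into the range -1 to 1."""
    return math.tanh(x)


for value in (-2.0, 0.0, 2.0):
    print(value, relu(value), sigmoid(value), tanh(value))
```

Frameworks apply the same functions element-wise across whole tensors.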

Training Neural Networks

Training involves presenting the network with labeled examples and adjusting weights to minimize prediction errors. Optimization algorithms such as gradient descent repeatedly move the parameters in the direction that reduces the loss. Learning rate, batch size, and the number of epochs are critical hyperparameters that determine training effectiveness and convergence speed.
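To make the roles of learning rate, batch size, and epochs concrete, here is a minimal sketch of mini-batch gradient descent. It fits a one-variable linear model rather than a full neural network, so that the gradients can be written by hand. The function name `train_linear`, the toy data, and the default hyperparameter values are assumptions for illustration only.

```python
def train_linear(xs, ys, learning_rate=0.05, batch_size=2, epochs=500):
    """Fit y = w * x + b by mini-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):                        # one epoch = one pass over the data
        for start in range(0, len(xs), batch_size):
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            n = len(batch_x)
            # Prediction errors for the current batch.
            errors = [(w * x + b) - y for x, y in zip(batch_x, batch_y)]
            # Gradients of the mean squared error with respect to w and b.
            grad_w = 2.0 * sum(e * x for e, x in zip(errors, batch_x)) / n
            grad_b = 2.0 * sum(errors) / n
            # Step against the gradient, scaled by the learning rate.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Toy labeled examples generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

In a neural network the update step is the same. The difference is that backpropagation supplies the gradients for every weight instead of a hand-derived formula.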

Modern training techniques employ regularization methods to reduce overfitting, including dropout and L1/L2 regularization. Batch normalization, although primarily a technique for stabilizing training, often has a mild regularizing effect as well. These approaches help networks generalize better to unseen data and maintain robust performance across different scenarios.
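Two of these techniques are small enough to sketch in plain Python. The example assumes the common "inverted" form of dropout, in which kept activations are scaled up during training so that nothing needs to change at inference time. It also assumes an L2 penalty that is simply added to the loss. The names `dropout`, `l2_penalty`, `rate`, and `strength` are illustrative, not taken from any particular library.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout: zero each activation with probability `rate` during training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # no change at inference time
    keep = 1.0 - rate
    # Kept values are scaled by 1 / keep so the expected activation stays the same.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, strength):
    """L2 regularization term: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)


rng = random.Random(0)  # seeded so the example is repeatable
dropped = dropout([1.0] * 10, rate=0.5, rng=rng)
penalty = l2_penalty([1.0, -2.0], strength=0.5)
print(dropped, penalty)
```

The penalty is added to the training loss, which nudges the optimizer toward smaller weights. Dropout instead discourages the network from relying on any single neuron.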

Types of Neural Networks

Different neural network architectures excel at specific tasks. Convolutional Neural Networks specialize in image processing and computer vision applications. Recurrent Neural Networks handle sequential data like text and time series. Transformer architectures have revolutionized natural language processing with attention mechanisms.
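As one concrete example of the attention mechanisms mentioned above, the following sketch implements scaled dot-product attention on plain Python lists. It is a simplified single-head version with no masking, batching, or learned projections. The function names and the toy inputs are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query gets a weighted average of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Toy input: both keys are identical, so the query attends to them equally.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [1.0, 0.0]]
values = [[0.0, 2.0], [4.0, 6.0]]

result = attention(queries, keys, values)
print(result)  # [[2.0, 4.0]]
```

Because the two keys match the query equally well, the output is simply the average of the two value vectors. With different keys, the weights would shift toward the better match.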

Each architecture incorporates unique design principles optimized for particular data types and learning objectives. Understanding these variations helps practitioners select appropriate models for their specific use cases and requirements.

Real-World Applications

Neural networks power numerous applications across industries. In healthcare, they assist in medical diagnosis and drug discovery. Financial institutions use them for fraud detection and algorithmic trading. Autonomous vehicles rely on neural networks for object detection and decision-making.

Natural language processing applications include machine translation, sentiment analysis, and chatbots. Computer vision enables facial recognition, image classification, and autonomous robotics. The versatility of neural networks continues to expand as researchers develop new architectures and training methods.

Challenges and Considerations

Despite their power, neural networks face several challenges. They often require substantial computational resources and large datasets for training. Interpretability remains a concern, as understanding why a network makes a specific decision can be difficult. Bias in training data can lead to unfair or discriminatory outcomes.

Researchers actively work on addressing these challenges through explainable AI techniques, efficient architectures, and fairness-aware algorithms. Understanding limitations helps practitioners deploy neural networks responsibly and effectively.

Getting Started with Neural Networks

Learning neural networks requires foundational knowledge in mathematics, including linear algebra, calculus, and probability. Programming skills in Python and familiarity with frameworks like TensorFlow or PyTorch are essential. Starting with simple projects and gradually increasing complexity helps build practical expertise.

Online courses, tutorials, and hands-on projects provide valuable learning resources. Engaging with the AI community through forums, conferences, and open-source contributions accelerates skill development and keeps practitioners up to date on the latest advances.

Conclusion

Neural networks represent a transformative technology with vast potential across numerous domains. Understanding their principles, architectures, and applications equips professionals to leverage AI effectively. As the field continues to evolve, staying informed about new developments and best practices remains crucial for success in AI and machine learning.